Visual Word2Vec (vis-w2v): Learning Visually Grounded Word Embeddings Using Abstract Scenes

People:

Satwik Kottur

Ramakrishna Vedantam

José M. F. Moura

Devi Parikh

Abstract:

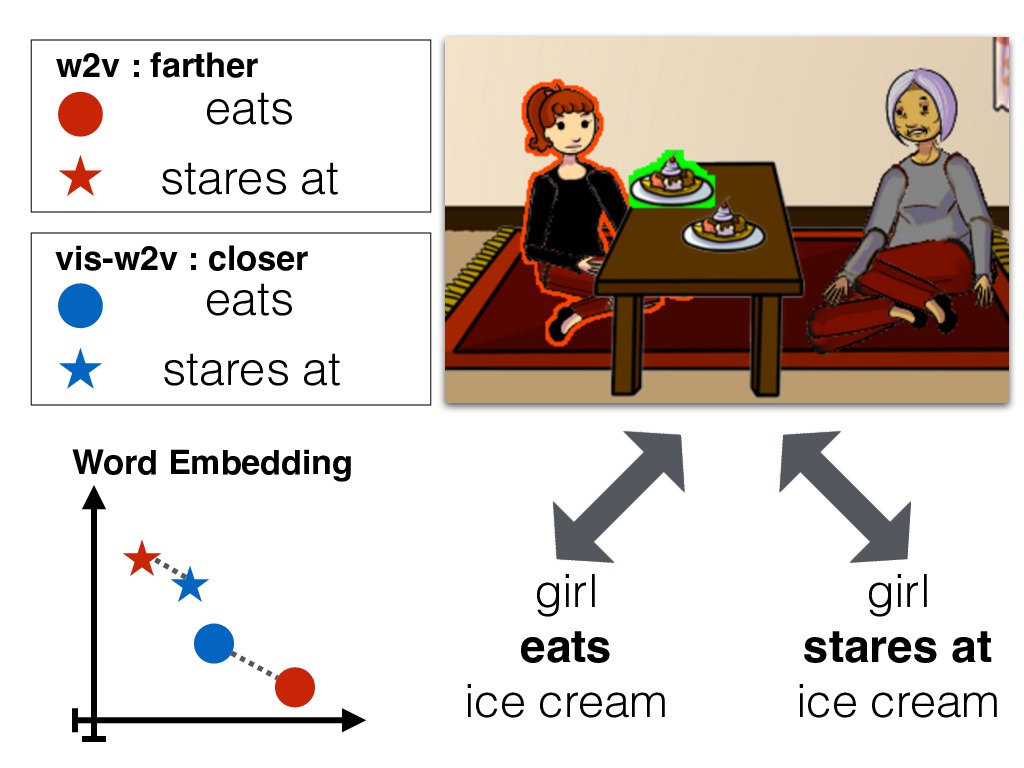

We propose a model to learn visually grounded word embeddings (vis-w2v) to capture visual notions of semantic relatedness. While word embeddings trained using text have been extremely successful, they cannot uncover notions of semantic relatedness implicit in our visual world. For instance, although "eats" and "stares at" seem unrelated in text, they share semantics visually. When people are eating something, they also tend to stare at the food. Grounding diverse relations like "eats" and "stares at" into vision remains challenging, despite recent progress in vision. We note that the visual grounding of words depends on semantics, and not the literal pixels. We thus use abstract scenes created from clipart to provide the visual grounding. We find that the embeddings we learn capture fine-grained, visually grounded notions of semantic relatedness. We show improvements over text-only word embeddings (word2vec) on three tasks: common-sense assertion classification, visual paraphrasing and text-based image retrieval.

Paper:

Satwik Kottur*, Ramakrishna Vedantam*, José M. F. Moura, Devi Parikh

Visual Word2Vec (vis-w2v): Learning Visually Grounded Word Embeddings Using Abstract Scenes

In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016

*=equal contribution


BibTeX:

@article{DBLP:journals/corr/KotturVMP15,
  author  = {Satwik Kottur and
             Ramakrishna Vedantam and
             Jos{\'{e}} M. F. Moura and
             Devi Parikh},
  title   = {Visual Word2Vec (vis-w2v): Learning Visually Grounded Word Embeddings
             Using Abstract Scenes},
  journal = {CoRR},
  volume  = {abs/1511.07067},
  year    = {2015},
  url     = {http://arxiv.org/abs/1511.07067},
}

Contact:

For any questions, feel free to contact Satwik Kottur or Ramakrishna Vedantam.